GPT-3.5 Fine-Tuning

What it means for you, and a fun test -- 8/23/2023

The Facts

Yesterday OpenAI released fine-tuning support for GPT-3.5 Turbo via their API. Until now, only the base models (not instruction fine-tuned) were available for fine-tuning.
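For a sense of what "via their API" looks like, here is a minimal sketch that builds (but does not send) the HTTP request that starts a fine-tuning job. It assumes the `https://api.openai.com/v1/fine_tuning/jobs` endpoint, the `gpt-3.5-turbo-0613` model name from OpenAI's launch docs, and a training file that was already uploaded with purpose `fine-tune`. The key and file ID below are placeholders, and `build_fine_tune_request` is a hypothetical helper, not part of any official SDK.

```python
import json
import urllib.request

# Fine-tuning jobs endpoint (assumed from OpenAI's REST API reference).
FINE_TUNING_JOBS_URL = "https://api.openai.com/v1/fine_tuning/jobs"


def build_fine_tune_request(api_key: str, training_file_id: str,
                            model: str = "gpt-3.5-turbo-0613") -> urllib.request.Request:
    """Build, but do not send, a POST request that starts a fine-tuning job.

    Hypothetical helper: `training_file_id` is assumed to be the ID returned
    after uploading a JSONL training file with purpose "fine-tune".
    """
    body = json.dumps({"training_file": training_file_id, "model": model}).encode("utf-8")
    return urllib.request.Request(
        FINE_TUNING_JOBS_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values only; a real run needs a real key and uploaded file ID.
    req = build_fine_tune_request("sk-PLACEHOLDER", "file-PLACEHOLDER")
    print(req.get_method(), req.full_url)
    # Actually sending it would be: urllib.request.urlopen(req)
```

The official `openai` Python package wraps the same call, but the raw request makes it clear how little is involved: a training file ID and a model name.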

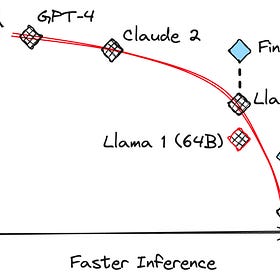

GPT-3.5 Turbo is now the most capable model available for fine-tuning, although Llama 2 70B comes close.

A Quick Test!

To celebrate, I decided to take the fine-tuning API on a quick test run and fine-tuned GPT-3.5 on all of my previous articles for Generally Intelligent.

Here’s what GPT-3.5 thinks I sound like:

## The Facts

Yesterday OpenAI released support for fine tuning their second most capable model, GPT-3.5.

## Why it matters

The fine tuning API is one of the most powerful tools for building AI applications. This update represents the highest quality model available for fine tuning, and will help developers build better AI applications.

It’s been almost 9 months since the last update to the fine tuning API, and there have been many advances in language models in that time. This release should be a significant improvement in performance.

## My thoughts

I’m glad to see this update! I think it will be really valuable for developers building applications on top of GPT-3.5.

I’m curious to see how much the performance has improved since the last release — I’m hopeful that this will be a big step forward.

Thanks for reading Generally Intelligent: A Daily AI Update! Subscribe for free to receive new posts and support my work.

Not bad!

Why it matters

Building a fine-tuned model on my writing history with OpenAI took about 30 minutes, mostly spent reading their developer guide and converting my posts into training data. The key to getting fine-tuning off the ground will almost certainly be developer experience: most developers got into LLMs through prompting alone, so fine-tuning can't add much extra hassle.

This release is an important step in that direction: it is now the easiest way for an average developer to start experimenting with fine-tuning. As I've written recently, I think fine-tuning is the clear path forward for building powerful AI applications over the next 12 months.

(Earlier post: "Llama 2: Time to Fine Tune")
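Most of my 30 minutes went into converting posts into training data. Below is a minimal sketch of that step, assuming OpenAI's chat-format JSONL (one `{"messages": [...]}` object per line) and posts stored as dicts with hypothetical `title` and `body` keys. The system prompt and the "Write a post titled" user turn are illustrative choices, not necessarily how my own dataset was built.

```python
import json
from typing import Iterable


def posts_to_jsonl(posts: Iterable[dict], system_prompt: str) -> str:
    """Turn blog posts into chat-format fine-tuning examples, one JSON object per line.

    Hypothetical schema: each post is a dict with "title" and "body" keys.
    The title becomes the user turn; the full post becomes the assistant turn.
    """
    lines = []
    for post in posts:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": f"Write a post titled: {post['title']}"},
                {"role": "assistant", "content": post["body"]},
            ]
        }
        # ensure_ascii=False keeps curly quotes and other non-ASCII text readable.
        lines.append(json.dumps(example, ensure_ascii=False))
    # Every example ends with a newline, so an empty input yields an empty file.
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    sample = [{"title": "GPT-3.5 Fine-Tuning", "body": "The Facts ..."}]
    print(posts_to_jsonl(sample, "You write a daily AI newsletter."), end="")
```

Writing the result to a `.jsonl` file gives you something you can upload as the training file. Using the title as the prompt is just one option; a one-line summary of each post would also work.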

My thoughts

Over the next few months, I'm hopeful we'll hear about some successful applications of fine-tuning. Back in January (before GPT-4), I knew of multiple teams having success with the OpenAI fine-tuning API; that has all but dried up as the API fell out of date. I expect things to change especially quickly once GPT-4 fine-tuning arrives this fall.