Author’s note: Sorry I missed a few days! I was off for Juneteenth and busy moving. Back this week!

Also, we’re hosting a fireside chat about open-source LLMs today at 10 am PT with some incredible guests: Vipul Ved Prakash (CEO of Together) and Reynold Xin (Co-Founder of Databricks).

Would love it if you joined; register by clicking here!

The ~~Facts~~ Speculation

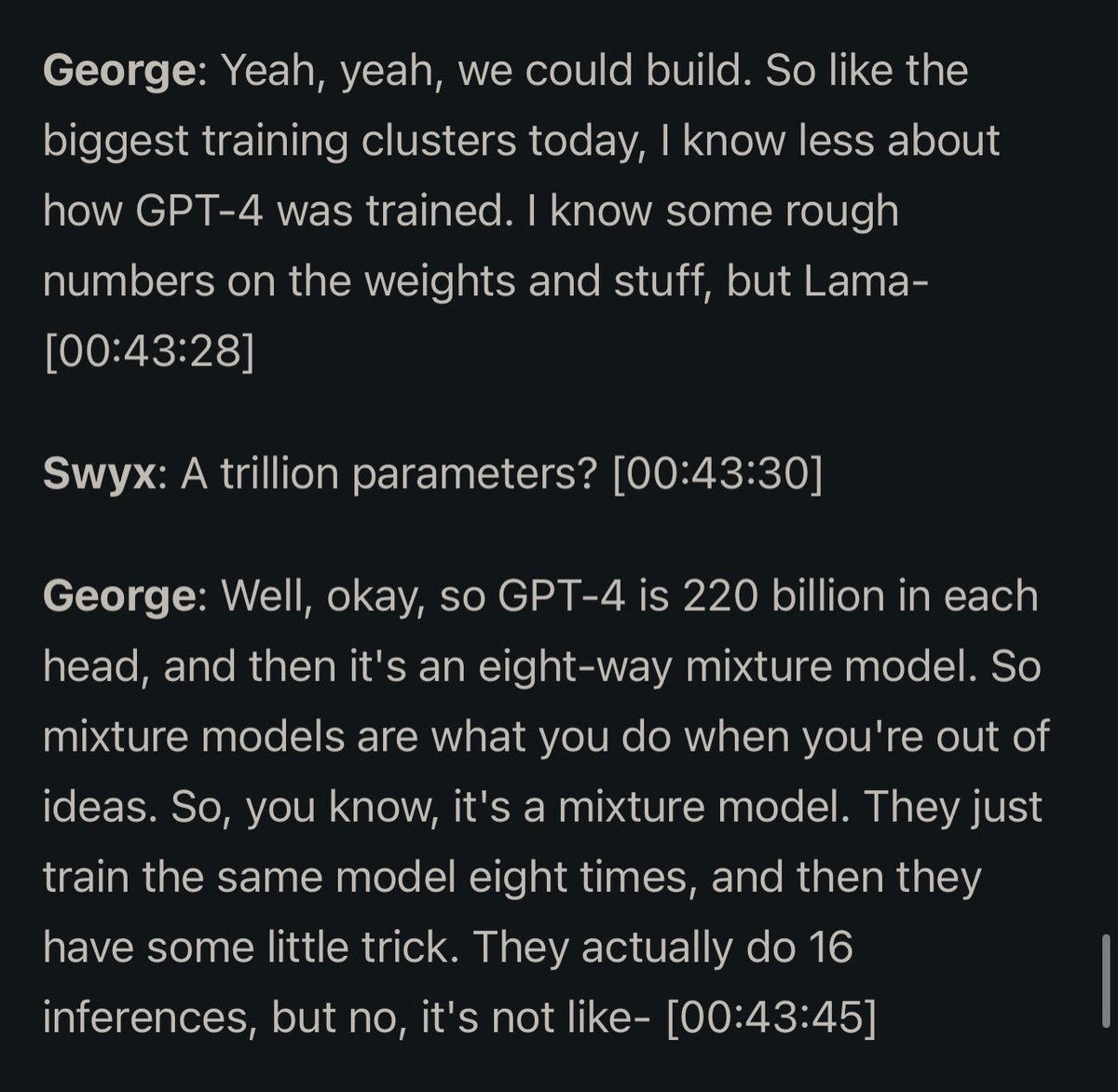

George Hotz (a.k.a. geohot) was on a podcast this week and dropped some speculative, second-hand details about the GPT-4 architecture. This matches the speculation I’ve heard from various experts: GPT-4 is likely not a single massive model but rather a mixture of specialist models that together produce each prediction.
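For readers new to the idea, here is a toy sketch of mixture-of-experts routing: a gate scores every expert for a given input, only the top-k experts actually run, and their outputs are blended using the gate weights renormalized over the chosen experts. The experts, the gate function, and `top_k=2` are hypothetical stand-ins; nothing here reflects GPT-4’s actual (unconfirmed) design.

```python
import math
from typing import Callable, List, Sequence

# A hypothetical "expert": any function from input to prediction.
Expert = Callable[[float], float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw gate scores into probabilities (shifted by the max for numerical stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_predict(
    x: float,
    experts: Sequence[Expert],
    gate: Callable[[float], Sequence[float]],
    top_k: int = 2,
) -> float:
    """Route x to the top_k highest-weighted experts and blend their outputs."""
    weights = softmax(gate(x))  # one weight per expert
    chosen = sorted(range(len(experts)), key=lambda i: weights[i], reverse=True)[:top_k]
    norm = sum(weights[i] for i in chosen)  # renormalize over the experts that actually run
    return sum(weights[i] / norm * experts[i](x) for i in chosen)


# Hypothetical toy setup: three "specialists" plus a gate that favors
# expert 0 for positive x and expert 2 for negative x.
experts: List[Expert] = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x]


def gate(x: float) -> List[float]:
    return [x, 0.0, -x]


print(moe_predict(2.0, experts, gate))           # weighted blend of experts 0 and 1
print(moe_predict(2.0, experts, gate, top_k=1))  # expert 0 only: prints 3.0
```

The appeal of the design is that each input only pays for the experts it is routed to, even though the total parameter count across all experts is much larger.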

Why it matters

There is an obvious limit to how far we can scale LLM training: the amount of high-quality training data available. Scaling models up is really only effective if you have enough data to train them; without it, capping model size (at something like 220B parameters) probably makes sense. OpenAI has been investing heavily in closing this gap by hiring contractors to produce domain-relevant data.
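To put rough numbers on the data ceiling, here is a back-of-the-envelope sketch using the often-cited Chinchilla heuristic of roughly 20 training tokens per parameter for compute-optimal training (Hoffmann et al., 2022). The ratio is an approximation, and the model sizes are illustrative rather than claims about GPT-4.

```python
# Rule of thumb from the Chinchilla scaling work: ~20 tokens per parameter
# for compute-optimal training. An approximation, not a law.
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(n_params: float, tokens_per_param: float = TOKENS_PER_PARAM) -> float:
    """Approximate number of training tokens a model with n_params would want."""
    return n_params * tokens_per_param


# Illustrative model sizes (not claims about any specific model).
for n_params in (220e9, 1e12, 2e12):
    tokens = compute_optimal_tokens(n_params)
    print(f"{n_params / 1e9:,.0f}B params -> ~{tokens / 1e12:,.1f}T tokens")
```

Under this heuristic, a 2T-parameter dense model would want on the order of 40T high-quality tokens, which illustrates why the supply of good data, and not only compute, can become the limiting factor.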

What this means for the industry:

We need more high-quality, domain-specific data, and producing that data is going to be a valuable industry.

Specialist models are likely the future: there probably isn’t enough data to keep scaling up training indefinitely to produce higher-quality models.

There is a lot of room for smaller players to produce domain-specific models. Training eight 250B-parameter models costs roughly as much compute as training one 2T-parameter model on the same number of tokens, but if you can train the best 250B-parameter model for your domain, you likely still have a place.
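The cost comparison can be sanity-checked with the standard approximation of about 6 FLOPs per parameter per training token (forward plus backward pass). This sketch assumes every model sees the same hypothetical 1T-token budget; that shared budget is what makes the two options cost the same.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens


TOKENS = 1e12  # hypothetical token budget, identical for every model

eight_specialists = 8 * training_flops(250e9, TOKENS)
one_generalist = training_flops(2e12, TOKENS)

print(f"8 x 250B: {eight_specialists:.2e} FLOPs")
print(f"1 x 2T:   {one_generalist:.2e} FLOPs")
print(f"ratio:    {eight_specialists / one_generalist:.2f}")
```

The equality depends on the equal token budget: under the same approximation, a single specialist trained on a smaller, domain-specific corpus costs proportionally less.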

My thoughts

It’s fun to get a bit more (likely) confirmation of what’s behind the GPT-4 black box. My personal thoughts:

Getting a model smarter than GPT-4 will probably take some fundamental innovation. Scaling up may not be feasible, which means it might be a while before we have a smarter model.

Getting smarter within a subdomain is definitely within reach, and I’m excited to see domain-specific providers emerge.

It looks like he was right, if this leak is true (and it appears to be): https://the-decoder.com/gpt-4-architecture-datasets-costs-and-more-leaked/