Context length is not all you need

But it is nice! - 4/25/23

The Facts

Another attempt at improving the scaling properties of LLMs was released yesterday:

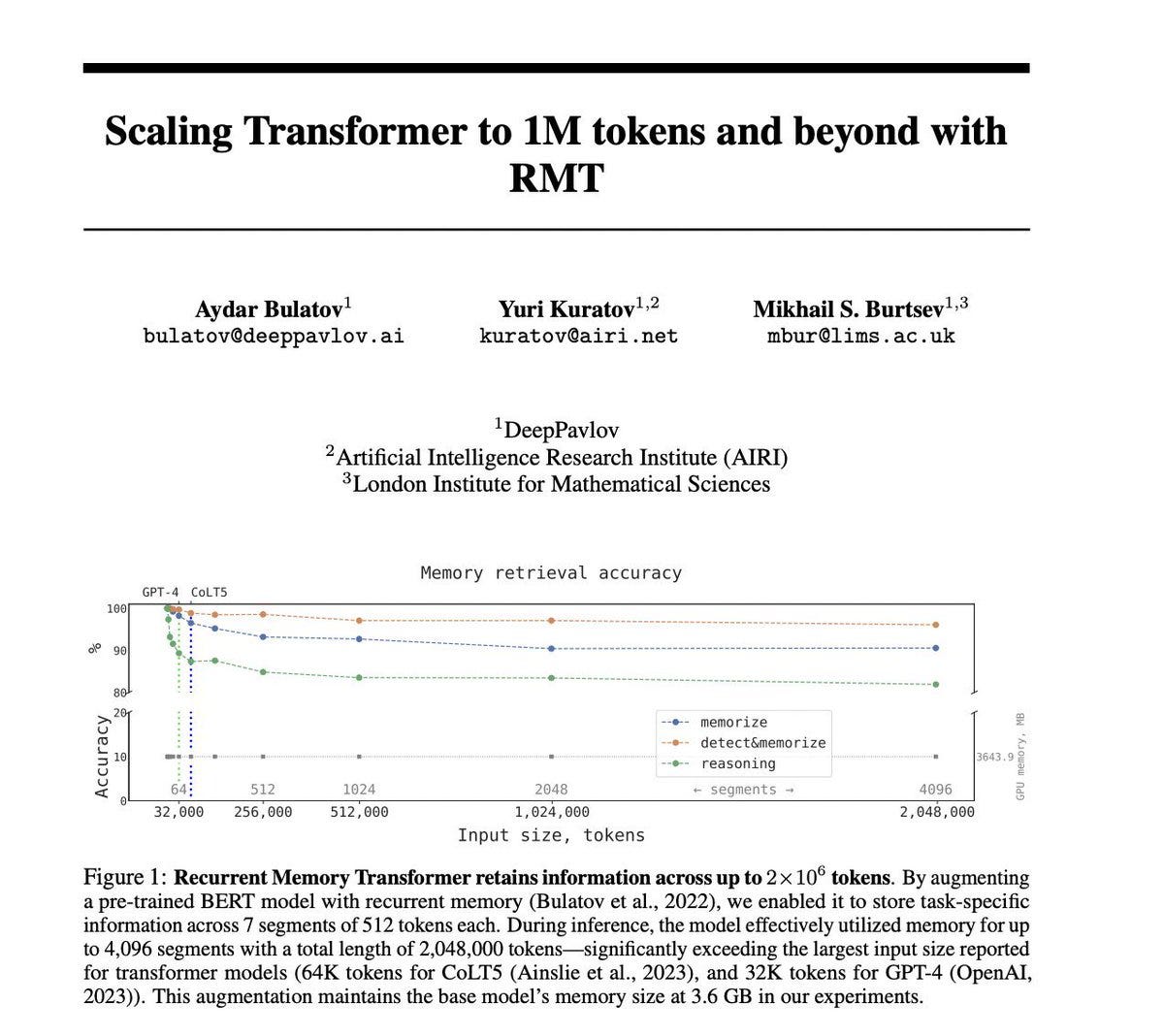

This approach uses Recurrent Memory Transformers, which turn the transformer into a recurrent architecture. That recurrence allows for essentially “infinite” context windows, tested up to millions of tokens.

Inference latency of this architecture scales roughly linearly with the number of input tokens. Notably, the actual paper is quite short, and it does less evaluation work than similar papers.
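To make the recurrence idea concrete, here is a toy sketch and not the paper's implementation. It assumes the input is split into fixed-size segments and that a fixed-size memory is carried from one segment to the next. `run_recurrent` and `toy_step` are hypothetical names, and `toy_step` is a stand-in for a real transformer forward pass.

```python
from typing import Callable, Sequence, Tuple

Memory = Tuple[int, ...]


def run_recurrent(
    tokens: Sequence[int],
    segment_len: int,
    step: Callable[[Memory, Sequence[int]], Memory],
    initial_memory: Memory,
) -> Tuple[Memory, int]:
    """Process tokens one segment at a time, carrying a fixed-size memory."""
    memory = initial_memory
    calls = 0
    for start in range(0, len(tokens), segment_len):
        segment = tokens[start:start + segment_len]
        # Each call sees only the current segment plus the carried memory.
        memory = step(memory, segment)
        calls += 1
    return memory, calls


def toy_step(memory: Memory, segment: Sequence[int]) -> Memory:
    # Hypothetical stand-in for a model call: fold the segment into the memory.
    return tuple((m + sum(segment)) % 1000 for m in memory)


final_memory, num_calls = run_recurrent(list(range(10)), 4, toy_step, (0, 0))
```

The number of `step` calls grows with `len(tokens) / segment_len`, which is where the roughly linear latency comes from in this sketch. The memory stays the same size no matter how long the input is.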

Why it matters

I’ve already written my thoughts about the power of longer or infinite context lengths, but this is the most I’ve seen the community talking about it. The obvious excitement is about the use cases that this model can unlock (“Write novels!” or “Analyze whole datasets!”).

Since today is a hype day, I thought I’d use it to make the case against infinite context lengths as the solution to LLM problems: a “full” 32k token call to the GPT-4 API costs ~$2-4 and, on a typical day, takes ~60 seconds. Assuming scaling is linear (like in this paper), a 2M token call would run you ~$200 and take a little over an hour!
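The back-of-the-envelope math can be sketched as below. This is a rough extrapolation that assumes cost and latency scale strictly linearly with token count, and it takes 32k and 2M as round numbers (32,000 and 2,000,000). The inputs are the ballpark figures from the paragraph above, not measured values, and `scale_linearly` is a name made up for this sketch.

```python
def scale_linearly(base_value: float, base_tokens: int, target_tokens: int) -> float:
    """Extrapolate a per-call figure to a larger context, assuming linear scaling."""
    return base_value * target_tokens / base_tokens


BASE_TOKENS = 32_000        # a "full" 32k call (rounded)
TARGET_TOKENS = 2_000_000   # a 2M token call (rounded)

# Ballpark inputs from the text: ~$2-4 and ~60 seconds per 32k call.
cost_low = scale_linearly(2.0, BASE_TOKENS, TARGET_TOKENS)
cost_high = scale_linearly(4.0, BASE_TOKENS, TARGET_TOKENS)
latency_hours = scale_linearly(60.0, BASE_TOKENS, TARGET_TOKENS) / 3600

print(f"cost: ${cost_low:.0f}-{cost_high:.0f}, latency: {latency_hours:.2f} hours")
```

With these inputs the estimate lands at roughly $125-250 and a little over an hour per call. Swap in your own prices and latencies to see how quickly the numbers grow.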

Simply putting data into the context of a model is clearly not the solution for every problem. While you can imagine use cases where $200 and an hour or two would be a good deal (if GPT-5 can write best-selling novels, that’s a great deal!), many others need access to a lot of data quickly and cheaply.

My thoughts

Context length is not all you need. We need tools to distill models, fine-tune models, and select which models to use for a given task. Information retrieval is a critical piece of the puzzle too.

LLMs are a new way of developing programmatic solutions to problems. A single monolithic model is not going to be the way forward; we’ll need specialized tools for different use cases.